InSight

Light-based sensor system helping the visually impaired detect objects

To maintain independence and navigate their surroundings, many visually impaired people use a white cane to identify upcoming obstacles. But the low-tech tool cannot detect obstacles above chest level, such as tree branches or overhangs, which leads to accidents. More advanced technology, like wearable devices that can better sense a person’s surroundings, can cost $300 to $1,100.

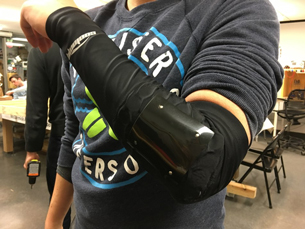

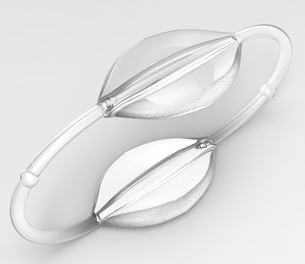

In just 10 weeks, students in COMP_SCI 338: Practicum in Intelligent Information Systems developed InSight, a light-based sensor system that can be worn on the hand to help the visually impaired detect objects, and each unit costs less than $35 to make.

The ubiquitous tool used by the visually impaired is a white cane, which is great, but it hasn't been updated in a long time. We wanted to see if we could improve or work in tandem with it to give users a more high-fidelity view of the world.

How It Works:

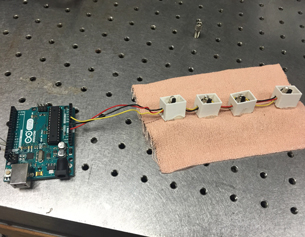

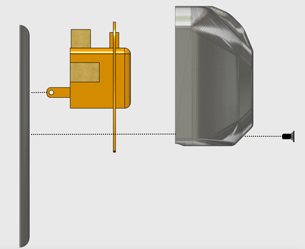

The students experimented with a number of sensors coupled with small computer processors, and found that a sonar sensor could take in information based on how far a person is from an object. When the user holds the device out on an outstretched arm and an object is identified, a haptic motor inside the device vibrates and a speaker makes a sound. As the user gets closer to the object, the sound varies in pitch and the vibration becomes stronger.
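The distance-to-feedback behavior described above can be sketched in a few lines of Python. This is an illustration only, not the team's code: the article gives no numbers, so the range limits, the pitch values, the linear mapping, and the names `feedback_for_distance` and `Feedback` are all assumptions. The article says only that the pitch varies, so the choice to make it rise as the object gets closer is also an assumption.

```python
from dataclasses import dataclass

# Hypothetical constants for illustration; none of these come from the article.
MAX_RANGE_CM = 200.0   # objects at or beyond this distance produce no feedback
MIN_RANGE_CM = 10.0    # at or below this distance, feedback is at its maximum
LOW_PITCH_HZ = 220.0   # tone when an object first comes into range
HIGH_PITCH_HZ = 880.0  # tone when an object is at the minimum distance


@dataclass
class Feedback:
    vibration: float  # motor strength from 0.0 (off) to 1.0 (strongest)
    pitch_hz: float   # speaker tone in hertz; 0.0 means silent


def feedback_for_distance(distance_cm: float) -> Feedback:
    """Map a distance reading to vibration strength and tone pitch."""
    if distance_cm >= MAX_RANGE_CM:
        return Feedback(vibration=0.0, pitch_hz=0.0)

    # Readings closer than the minimum are treated as the minimum.
    clamped = max(distance_cm, MIN_RANGE_CM)

    # closeness is 0.0 at the edge of range and 1.0 at the minimum distance.
    closeness = (MAX_RANGE_CM - clamped) / (MAX_RANGE_CM - MIN_RANGE_CM)

    pitch = LOW_PITCH_HZ + closeness * (HIGH_PITCH_HZ - LOW_PITCH_HZ)
    return Feedback(vibration=closeness, pitch_hz=pitch)
```

With these assumed constants, an object halfway through the range (105 cm) yields half-strength vibration and a 550 Hz tone. A real device would likely also smooth noisy readings before mapping them, which this sketch leaves out.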

Benefits:

- Identifies surrounding objects in all directions

- Low-cost production (less than $35 per device)

- Minimizes accidents and injuries

- Collects data to provide users with higher quality information about their surroundings

Development Process:

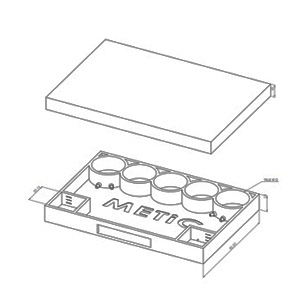

At the start of the 10-week class, the group researched the components needed to create a light-sensor system. The students then created a basic prototype using a microcontroller packaged into a small box, mounted on an arm brace.

The group then began testing the prototype around campus with their teammate, Batuhan Demir (’21), who is visually impaired and has used a white cane to navigate his surroundings for more than 10 years.

“I am very excited about the development of InSight as we have done an amazing prototype in just 10 weeks for me to start feeling more confident while walking around the Northwestern campus,” Demir said. “I’m also excited about the future capabilities of InSight and its potential to make the 253 million visually impaired people worldwide more independent.”

Current Status:

The students shared their prototype with the Northwestern community during final presentations for COMP_SCI 338. Some of the team members plan to conduct an independent study with Kris Hammond, Bill and Cathy Osborn Professor of Computer Science, to continue refining the product.

The students will work through challenges such as the sensor’s sensitivity to sunlight. Hammond has also challenged the students to enhance the device by developing the capability to create a 3D map that is saved locally, so that when users move around a familiar space, they can access a map of their surroundings through haptics and sounds.

Team Members: Batuhan Demir (political science ’21), Joshua Klein (computer science ’20), Biraj Parikh (computer science ’20), Harrison Pearl (computer science ’20), Jodie Wei (computer science ’19)

Adviser: Kris Hammond, Bill and Cathy Osborn Professor of Computer Science